Voxie uses Momento Cache to accelerate Lambda processing and reduce costs for AI-powered SMS

A collaborative relationship with Momento yields incredible results for Voxie’s AI conversational marketing tech.

Industry: Marketing Technology, AI/ML

Use Case: Asynchronous Job Status Storage, Rate Limiting, Caching API Responses

About Voxie

Our purpose at Voxie is to automate customer experience touchpoints through AI-powered SMS. We tailor our approach to the needs of each brand we work with, helping them connect with the right customers in real time through automated, personalized, and highly accurate conversational marketing, ultimately driving business growth and customer satisfaction.

Solution Overview

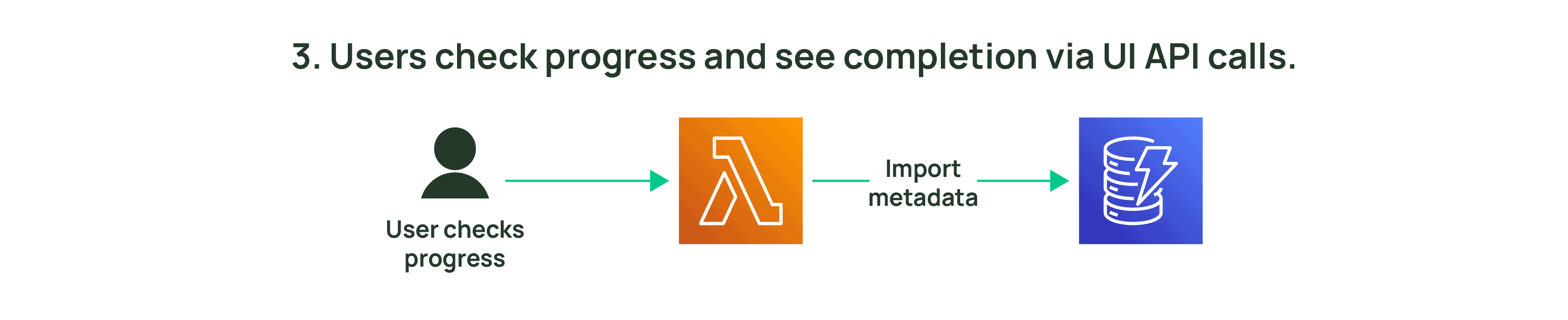

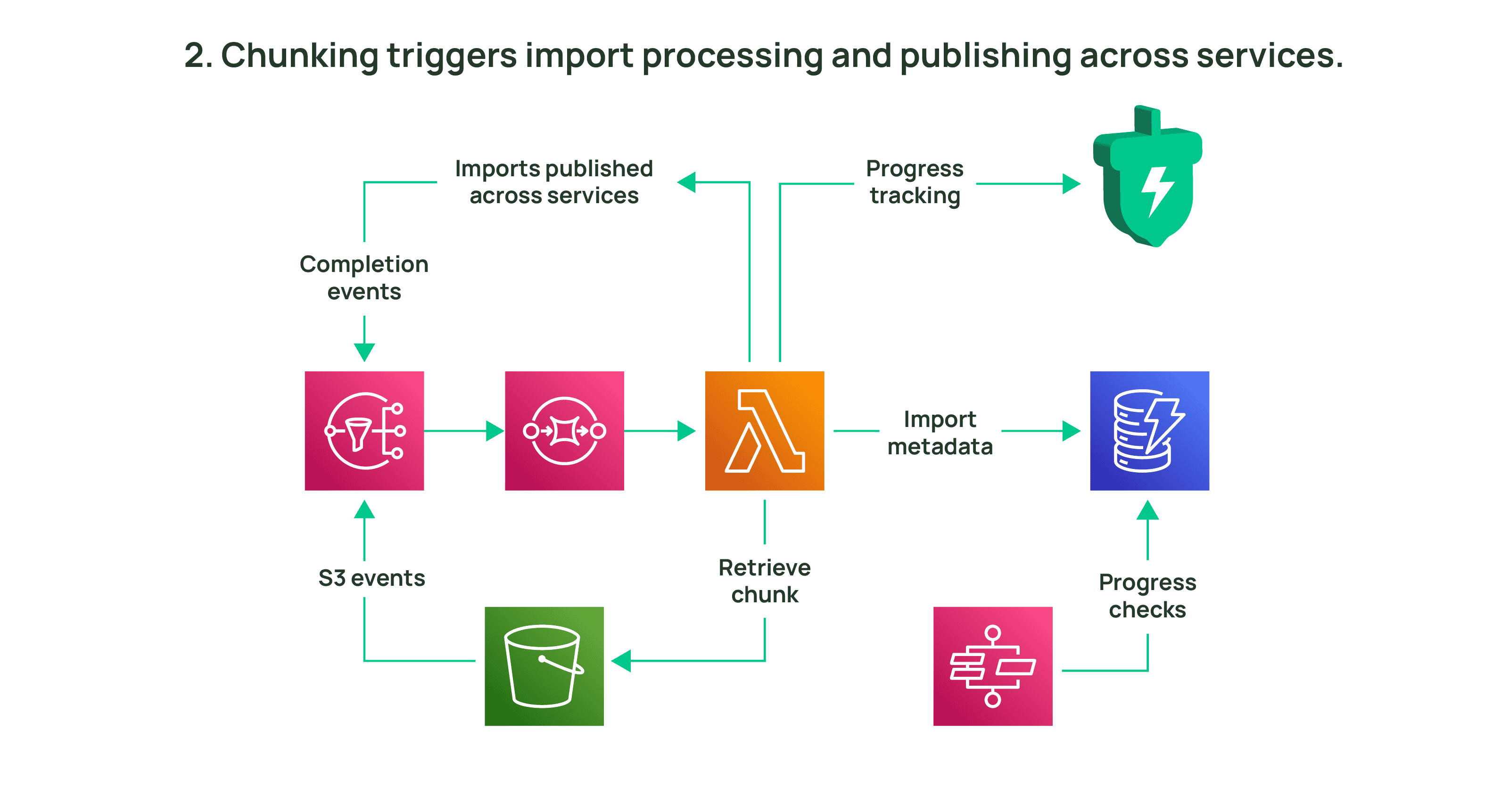

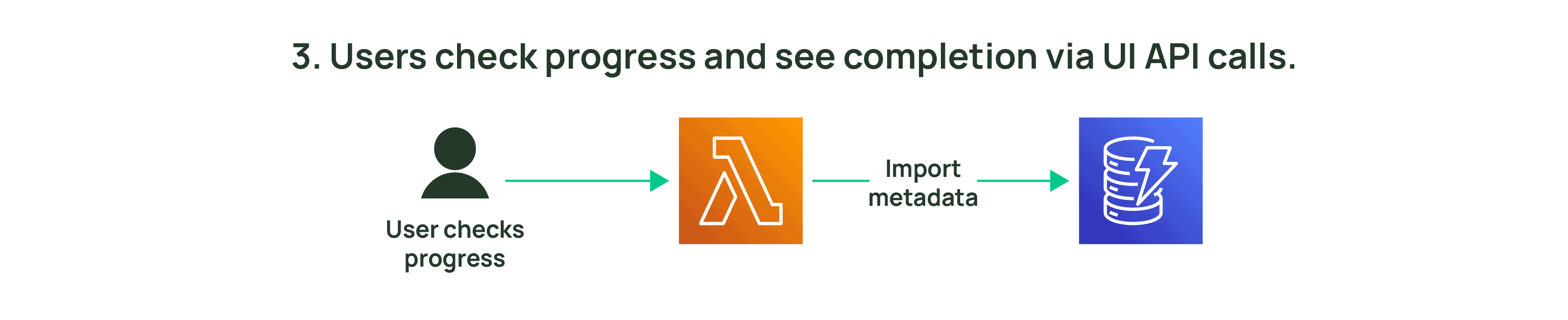

One of our workflows is an imports and targeted segmentation system that ingests large files and splits them into smaller chunks so they can be processed in a distributed fashion. We use Momento Cache as a centralized counter that tracks progress across this distributed chunk processing. We also use Momento Cache to enforce rate limits on our public APIs and to cache responses from other stores, including Amazon DynamoDB and AWS Key Management Service.
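To illustrate the centralized-counter pattern described above, here is a minimal Python sketch. It assumes a hypothetical `CounterStore` interface with an atomic `increment(key, amount, ttl_seconds)` operation that stands in for a shared serverless cache. It is not the Momento SDK's actual API (Voxie uses the PHP SDK), and the in-memory store exists only so the example runs. Each worker adds its chunk's row count to a shared key. The worker whose increment reaches the import's total row count knows the job is complete.

```python
from __future__ import annotations

import time
from typing import Protocol


class CounterStore(Protocol):
    """Hypothetical interface for an atomic, TTL-aware counter (not the Momento SDK API)."""

    def increment(self, key: str, amount: int, ttl_seconds: float) -> int: ...


class InMemoryCounterStore:
    """Single-process stand-in so the sketch runs; a real deployment would call a shared cache."""

    def __init__(self) -> None:
        # key -> (current count, monotonic expiry time)
        self._values: dict[str, tuple[int, float]] = {}

    def increment(self, key: str, amount: int, ttl_seconds: float) -> int:
        now = time.monotonic()
        value, expires_at = self._values.get(key, (0, now))
        if expires_at <= now:
            value = 0  # missing or expired: start a fresh count
        value += amount
        # Each write refreshes the TTL, so an idle counter eventually expires.
        self._values[key] = (value, now + ttl_seconds)
        return value


def record_chunk_done(store: CounterStore, import_id: str, rows_in_chunk: int, total_rows: int) -> bool:
    """Called by a worker after finishing one chunk; returns True once all rows are counted."""
    key = f"import:{import_id}:rows_processed"  # hypothetical key scheme
    processed = store.increment(key, rows_in_chunk, ttl_seconds=3600)
    return processed >= total_rows


# Usage: four workers each report a 250-row chunk of a 1,000-row import.
store = InMemoryCounterStore()
results = [record_chunk_done(store, "imp-42", 250, 1000) for _ in range(4)]
print(results)  # [False, False, False, True]
```

The counter lives in the cache rather than in any single worker, so chunks can finish in any order. The TTL lets short-lived progress data expire on its own. This sketch does not deduplicate retried chunks, so a retry would be counted twice.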

Initial State

Our imports and targeted segmentation system previously used a serverless time series database for both calculations and progress tracking. However, as our data volume and the associated load grew, that database could no longer keep up with our demands.

Realizing we needed to change direction, we took a fresh look at our requirements and broke them down to the basics: we needed high-velocity writes that scale efficiently, plus the ability to count entries directly. Because our data is short-lived, we didn’t need database-level durability. This led us to consider in-memory stores like Redis, but we preferred a truly serverless experience that would offload as much of the management effort as possible.

Product Discovery

We had heard that Momento’s new caching product promised to meet our requirement for a serverless in-memory datastore. When we reached out, the Momento team spent considerable time digging into the details of our needs and brainstorming possible solutions with us. This collaborative interaction was refreshing, and our early validation of Momento Cache’s performance characteristics was also positive.

Ultimately, we chose to move forward with Momento both because of the product’s performance characteristics and because of the relationship we built with the Momento team. We had a poor product and service experience with our previous solutions. By contrast, the Momento team took the time to understand our use case and give feedback on how Momento could solve our problems, and that is what made us want to work with them.

Implementation

The initial deployment of our Momento solution for distributed counting was completed successfully in under three weeks, despite a few minor complications. The Momento team was responsive and collaborative throughout the process. When certain behaviors of the Momento SDK for PHP didn’t fit our configuration, they were promptly adjusted. Because the SDK is open source, we were even able to contribute to those improvements directly. Additionally, when we found optimization issues related to segment size and count, we tackled them together with Momento engineers through a combination of client-side and service-side adjustments.

Momento also underpins the rate limiting we impose on our public APIs and helps us cache expensive calls to other stores. Momento Cache is much more than a simple caching service: its collection data types, such as dictionaries, lists, sets, and sorted sets, address a broad range of our data needs. Constructive engagement with the Momento team and their swift product enhancements have consistently exceeded our expectations, allowing us to quickly build and test improvements to our own product. For Voxie, the Momento experience is not just about solving problems. It is also about enjoying the process of giving feedback and seeing it yield results.
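The rate-limiting and caching roles mentioned above can be sketched in a few lines of Python. This is an illustrative sketch only. `InMemoryCache` is a hypothetical stand-in for a remote cache with TTL-based `get`, `set`, and atomic `increment`; it is not the Momento SDK. The fixed-window algorithm is an assumption, since the case study does not say which rate-limiting algorithm Voxie uses. `get_or_load` shows the cache-aside pattern for expensive calls such as DynamoDB reads or KMS operations, with a local function standing in for the real call.

```python
from __future__ import annotations

import time
from typing import Any, Callable


class InMemoryCache:
    """Hypothetical stand-in for a remote cache with TTLs (not the Momento SDK API)."""

    def __init__(self) -> None:
        # key -> (value, monotonic expiry time)
        self._items: dict[str, tuple[Any, float]] = {}

    def _live(self, key: str) -> tuple[Any, float] | None:
        item = self._items.get(key)
        if item is not None and item[1] <= time.monotonic():
            del self._items[key]  # lazily evict expired entries
            item = None
        return item

    def get(self, key: str) -> Any | None:
        item = self._live(key)
        return None if item is None else item[0]

    def set(self, key: str, value: Any, ttl_seconds: float) -> None:
        self._items[key] = (value, time.monotonic() + ttl_seconds)

    def increment(self, key: str, amount: int, ttl_seconds: float) -> int:
        item = self._live(key)
        value = (0 if item is None else item[0]) + amount
        self.set(key, value, ttl_seconds)
        return value


def allow_request(cache: InMemoryCache, client_id: str, limit: int,
                  window_seconds: int, now: float | None = None) -> bool:
    """Fixed-window rate limit: at most `limit` requests per client per window."""
    now = time.time() if now is None else now
    window = int(now // window_seconds)  # index of the current window
    key = f"ratelimit:{client_id}:{window}"  # one counter per client per window
    count = cache.increment(key, 1, ttl_seconds=window_seconds)
    return count <= limit


def get_or_load(cache: InMemoryCache, key: str, loader: Callable[[], Any],
                ttl_seconds: float) -> Any:
    """Cache-aside: return the cached value, or call the expensive loader and cache it."""
    cached = cache.get(key)
    if cached is not None:  # note: a cached None is treated as a miss
        return cached
    value = loader()
    cache.set(key, value, ttl_seconds)
    return value


# Usage: a client limited to 3 requests per 60-second window sends 5 requests.
cache = InMemoryCache()
decisions = [allow_request(cache, "client-a", limit=3, window_seconds=60, now=120.0)
             for _ in range(5)]
print(decisions)  # [True, True, True, False, False]

calls: list[int] = []

def slow_lookup() -> dict[str, str]:
    calls.append(1)  # stands in for a DynamoDB read or KMS call
    return {"plan": "pro"}

first = get_or_load(cache, "account:123", slow_lookup, ttl_seconds=300)
second = get_or_load(cache, "account:123", slow_lookup, ttl_seconds=300)
print(len(calls))  # 1: the second call is served from the cache
```

A fixed window is the simplest limiter to build on an atomic counter. However, it can admit up to twice the limit in a short burst that straddles a window boundary. Sliding-window or token-bucket variants smooth this out at the cost of more cache operations per request.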

Results

Momento continues to impress us as a truly serverless solution. Momento Cache performs well across our use cases, and the Momento team works rigorously to improve its capabilities for our specific needs.

Besides replacing our previous vendors’ products, Momento has reduced our serverless compute spend by speeding up our AWS Lambda processing by up to 100x, all while shrinking our operational surface area as an engineering group. These cost and time savings attest to Momento’s vital role in enhancing our service offerings. Thanks to Momento, we can get our ideas into production even faster.

Future Implementations

Our team looks forward to expanding our partnership with Momento. Right now, we’re in the process of migrating our pub/sub workflows to Momento Topics.

Reduce costs and improve performance for AI messaging applications with Momento Cache. Sign up and try it out today.
