How to fix connection timeout issues with AWS Lambda in VPCs

Address connection timeouts with AWS Lambda in VPCs by understanding networking limits.

Preparing for their next product release, a Momento customer hit critical connection establishment timeouts to Momento Cache during load tests on AWS Lambda. These tests simulated the highest and most sudden bursts of activity: a best-case scenario for customer adoption, though an expensive one to run. Because Momento was key to their serverless architecture, the timeouts threatened launch readiness, and reproducing them threatened the budget.

Striving for the best customer experience, Momento drove the investigation. Not only did we uncover a straightforward solution, but we also discovered crucial details regarding Lambda’s behavior within Virtual Private Clouds (VPCs). Anyone running Lambda in a VPC at scale should take heed.

Pulling the thread

We began by investigating our service. Load balancer and internal service metrics showed no signs of distress or elevated error rates. Indeed, internal load tests far exceeded the customer’s connection load without issue. With this finding, we shifted our attention from Momento to the customer’s architecture.

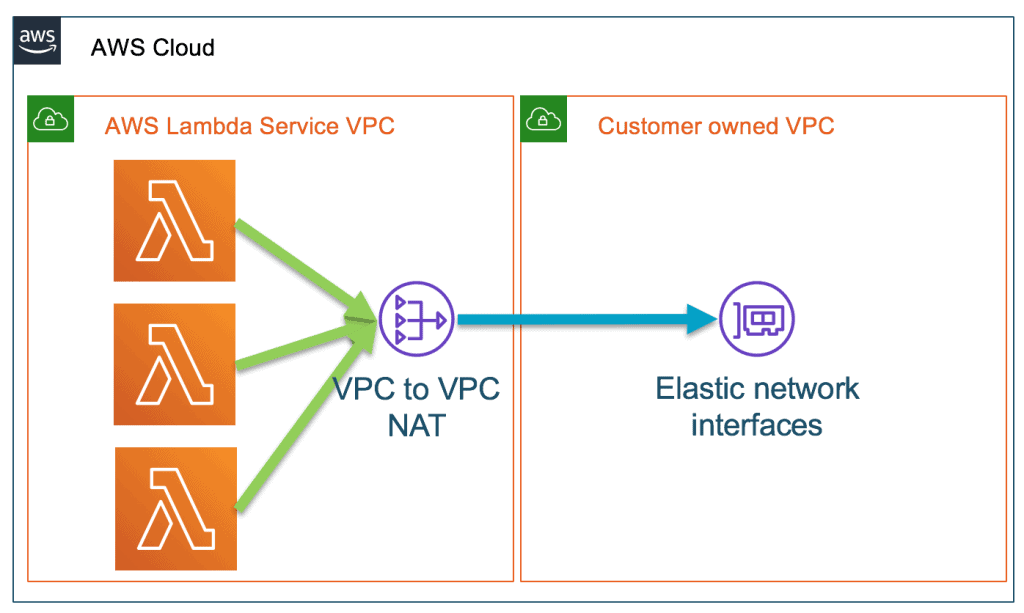

In this case, the customer had a Lambda function in their VPC connecting to Momento via a NAT Gateway. Although a less common setup, Lambda-in-a-VPC proved necessary for them to use other AWS services from Lambda.

Reproducing this setup and driving high connection concurrency to Momento, we initially found connection establishment issues on the NAT Gateway. Pulling the thread further, we uncovered another, more specific cause: the under-the-hood networking from the Lambda service VPC to the customer VPC. Although this networking is built on a scalable internal service called Hyperplane, the Hyperplane Elastic Network Interfaces (ENIs) did not scale to handle the bursts of new connections from the Lambda function. Instead, the Lambda functions exhausted the network interfaces’ capacity, and connections to Momento simply timed out.

Diagnosis and key findings

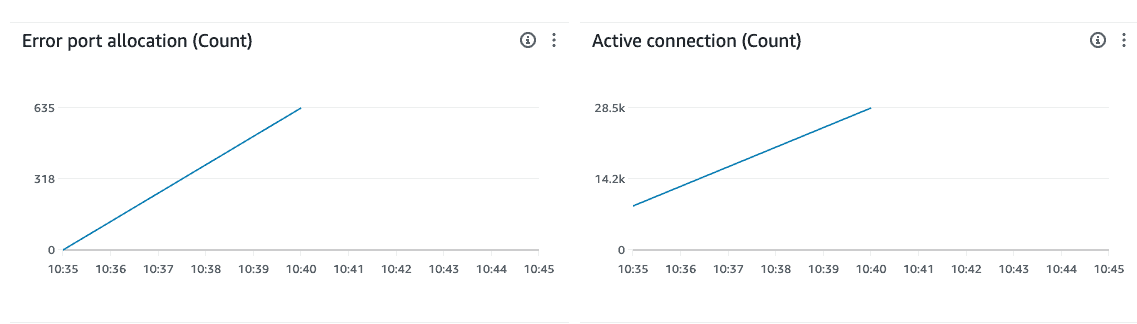

To find the root cause, we set up test environments that drove a large number of connections to Momento and monitored active connection counts on the NAT Gateway. Our key findings were:

- NAT Gateway Bottlenecks: NAT Gateways fail to open new connections once saturated. These failures are visible in CloudWatch as the ErrorPortAllocation metric. Even after we increased capacity on the NAT Gateway, the timeouts remained, ruling out NAT Gateway resource exhaustion as the sole cause.

- Hyperplane ENI Connection Limits: During tests we observed active connections peaking close to a single ENI’s connection limit. This, combined with connections failing to establish after 30 seconds, strongly suggested ENI limitations.

The first limitation encountered was on the NAT Gateway. With their default setup, NAT Gateways can open “at most 55,000 concurrent connections to a unique destination” (source). Any new connection thereafter will fail to open and will be reported as a port allocation error on the NAT Gateway’s CloudWatch metrics.
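As a minimal sketch of how one might check for these errors programmatically, the Python snippet below builds a CloudWatch `GetMetricStatistics` request for `ErrorPortAllocation` in the `AWS/NATGateway` namespace. It assumes boto3 is installed and AWS credentials are configured. The function names and the gateway ID in the usage note are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def port_allocation_query(nat_gateway_id, minutes=60, now=None):
    """Build GetMetricStatistics parameters for a NAT Gateway's ErrorPortAllocation."""
    end = now or datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/NATGateway",
        "MetricName": "ErrorPortAllocation",
        "Dimensions": [{"Name": "NatGatewayId", "Value": nat_gateway_id}],
        "StartTime": end - timedelta(minutes=minutes),
        "EndTime": end,
        "Period": 60,           # one datapoint per minute
        "Statistics": ["Sum"],  # failed port allocations per period
    }


def port_allocation_errors(nat_gateway_id, minutes=60):
    """Return the total ErrorPortAllocation count over the window."""
    import boto3  # imported lazily; assumes boto3 and AWS credentials are available

    cloudwatch = boto3.client("cloudwatch")
    response = cloudwatch.get_metric_statistics(
        **port_allocation_query(nat_gateway_id, minutes)
    )
    return sum(point["Sum"] for point in response["Datapoints"])
```

Calling `port_allocation_errors("nat-0123456789abcdef0")` with a real gateway ID during a load test would return a nonzero total if the gateway ran out of ports in the last hour.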

The second, more intriguing limitation is in Lambda service networking. As explained in a 2019 announcement, when a customer runs a Lambda function in their VPC, AWS connects the Lambda service VPC to the customer’s VPC with Hyperplane, an AWS internal networking service. Relevant to our high-concurrency scenario, each Hyperplane ENI has a limit of 65,000 concurrent connections. Unfortunately for us, and unlike the NAT Gateway’s port allocation errors, Hyperplane ENI connection usage is not reported anywhere in CloudWatch.

Interesting side note: We were initially puzzled as to why DNS requests for Momento always succeeded, whereas the connection requests to Momento that immediately followed timed out. With a clearer picture of the networking, we now understand that the DNS requests were routed to the Lambda VPC’s name server, while the Momento connection requests were routed through the Lambda Hyperplane networking and dropped.
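One way to observe this split is to time name resolution and TCP connection establishment separately. The following Python sketch does so with the standard library only. The `probe` helper and its return shape are illustrative assumptions, not the tooling we used in the investigation.

```python
import socket
import time


def probe(host, port, timeout=5.0):
    """Time DNS resolution and TCP connect separately for host:port."""
    start = time.monotonic()
    # Resolution errors (socket.gaierror) propagate to the caller on purpose.
    infos = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
    dns_seconds = time.monotonic() - start

    family, socktype, proto, _, address = infos[0]
    start = time.monotonic()
    try:
        with socket.socket(family, socktype, proto) as sock:
            sock.settimeout(timeout)
            sock.connect(address)
        connected = True
    except OSError:  # includes timeouts and refused connections
        connected = False
    connect_seconds = time.monotonic() - start

    return {
        "dns_seconds": dns_seconds,
        "connected": connected,
        "connect_seconds": connect_seconds,
    }
```

In a scenario like ours, a probe of this kind would report a fast, successful DNS step followed by a connect step that fails after the full timeout.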

The solution: Increase network capacity

We can address each of these bottlenecks in a straightforward way:

- Increase NAT Gateway capacity: Provision secondary elastic IPs on the NAT Gateway. Each IP supports an additional 55,000 connections with total capacity capped at 440,000 connections (see here).

- Provision additional subnets: Because AWS provisions a Hyperplane ENI per VPC-subnet-security-group combination, when we add a private subnet to our VPC, Lambda will provision an additional ENI (see “Lambda Hyperplane ENIs”). Thus we may increase capacity by 65,000 connections for each private subnet we add to a VPC.
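The arithmetic behind these two steps can be sketched in a few lines of Python. This is a rough planning aid under stated assumptions: all connections go to a single destination, connections spread evenly across ENIs, the function uses one security group set, and a 20% headroom is an arbitrary default. The `plan_capacity` helper and its parameters are hypothetical.

```python
NAT_CONNECTIONS_PER_IP = 55_000  # per unique destination, per NAT Gateway IP
NAT_MAX_CONNECTIONS = 440_000    # cap with all secondary IPs provisioned
ENI_CONNECTIONS = 65_000         # per Hyperplane ENI


def ceil_div(a, b):
    """Integer division rounded up."""
    return -(-a // b)


def plan_capacity(peak_connections, headroom_percent=20):
    """Estimate secondary EIPs and private subnets for a peak connection count."""
    target = ceil_div(peak_connections * (100 + headroom_percent), 100)
    if target > NAT_MAX_CONNECTIONS:
        raise ValueError(
            f"{target} connections exceeds a single NAT Gateway's "
            f"{NAT_MAX_CONNECTIONS} limit to one destination"
        )
    nat_ips = max(1, ceil_div(target, NAT_CONNECTIONS_PER_IP))
    subnets = max(1, ceil_div(target, ENI_CONNECTIONS))  # one ENI per subnet
    return {
        "target_connections": target,
        "secondary_eips": nat_ips - 1,  # the primary IP is already present
        "private_subnets": subnets,
    }
```

For example, a peak of 100,000 concurrent connections with 20% headroom gives a target of 120,000, which works out to two secondary EIPs (three IPs in total) and two private subnets.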

Lessons learned and best practices

As a flagship serverless offering, AWS Lambda simplifies resource management for the developer. When run in a VPC, however, this serverless ideal breaks down. When anticipating high connection concurrency, we must plan capacity for our networking resources: estimate peak load, then provision NAT Gateway secondary EIPs and VPC subnets accordingly.

When possible, avoid these limitations by running Lambda outside of a VPC. When running in a VPC, PrivateLink may be a more cost-effective solution than a NAT Gateway while requiring less capacity planning.

Lastly, use client libraries optimized for connection reuse in a Lambda environment. At Momento we have hardened our libraries for this situation.
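A common way to get connection reuse in Lambda is to create the client once per execution environment and share it across warm invocations, rather than opening a new connection in every invocation. The Python sketch below illustrates the pattern. `CacheClient` and its endpoint are hypothetical stand-ins for a real client library, not Momento’s actual API.

```python
class CacheClient:
    """Hypothetical stand-in for a client that holds open connections."""

    instances = 0  # counts constructions, to show reuse

    def __init__(self, endpoint):
        CacheClient.instances += 1
        self.endpoint = endpoint

    def get(self, key):
        # A real client would send the request over an existing connection.
        return f"value-for-{key}"


_client = None  # lives for the lifetime of the execution environment


def get_client():
    """Create the client on first use, then reuse it on later invocations."""
    global _client
    if _client is None:
        _client = CacheClient("cache.example.com:443")  # placeholder endpoint
    return _client


def handler(event, context):
    """Lambda entry point: reuses the shared client instead of reconnecting."""
    client = get_client()
    return {"value": client.get(event["key"])}
```

With this structure, each execution environment opens its connections once, so a burst of invocations on warm environments does not translate into a matching burst of new connections.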

Conclusion

Our customer was thrilled with this resolution, thanking us for “creating an easy solution… [going] above and beyond just letting it be a risk factor.” In the end, the Momento service held its own and we uncovered an important lesson for the community. AWS Lambda simplifies the developer experience, but in a VPC the developer must plan for the connection limits of the NAT Gateway and the Hyperplane ENIs.