Faster APIs, faster developers: API Gateway custom authorizers

How to add a custom authorizer and reduce API latencies with remote caching.

In my previous post about speeding up Lambda latencies, we set up a basic REST API on AWS Lambda, AWS API Gateway, and DynamoDB. We then added Momento to reduce latency on our API endpoint for end users. Our demo service mimics a basic user profile service like the one you might see on a typical social network. Today we are going to expand it by adding /profile-pic and /cached-profile-pic endpoints for fetching users' avatar images. We will also secure these endpoints with custom auth logic in a dedicated custom authorizer Lambda. After that, we will dig into the benefits of adding a centralized cache and why serverless architectures and caching work so well together.

What are AWS API Gateway custom authorizers?

Before we build and secure the new endpoint, I’d like to cover AWS API Gateway custom authorizers and their benefits. A custom authorizer is a Lambda function that API Gateway invokes before your service Lambda (or before any AWS resource you are proxying to). The function examines the inbound request and performs whatever authentication and authorization your API needs. It then builds an IAM access policy on the fly and returns it, either allowing the request to continue on to the service or blocking it up front. Moving auth logic into a custom authorizer gives you a clean, reusable pattern across your service APIs, with that logic encapsulated in a standalone component. This lets service teams move fast and makes the safest option also the easiest one, which reduces the risk of divergent implementations across the company’s API endpoints and services. For more information about API Gateway custom authorizers, check out Alex DeBrie’s excellent deep dive on the topic.
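To make the "reusable pattern" point concrete, here is a minimal sketch of how the shared policy-building code can be separated from the per-API decision. It is an illustration, not code from this project: `makeRequestAuthorizer`, `Decide`, and the admin-only example are hypothetical, and the event and result interfaces are simplified local stand-ins for the fuller types in `@types/aws-lambda`.

```typescript
// Simplified local stand-ins for the REQUEST authorizer event and IAM policy result.
interface RequestAuthorizerEvent {
  methodArn: string;
  headers: Record<string, string | undefined>;
  pathParameters: Record<string, string | undefined>;
}

interface AuthorizerResult {
  principalId: string;
  policyDocument: {
    Version: string;
    Statement: { Action: string; Effect: 'Allow' | 'Deny'; Resource: string }[];
  };
  context: Record<string, string>;
}

// The only API-specific piece: may this caller invoke this resource?
type Decide = (callerId: string, event: RequestAuthorizerEvent) => Promise<boolean>;

// Hypothetical factory: wraps a decision function in the shared policy-building code.
function makeRequestAuthorizer(decide: Decide) {
  return async (event: RequestAuthorizerEvent): Promise<AuthorizerResult> => {
    const callerId = event.headers['Authorization'] ?? '';
    // Requests without credentials are denied without calling the decision function.
    const allowed = callerId !== '' && (await decide(callerId, event));
    const effect: 'Allow' | 'Deny' = allowed ? 'Allow' : 'Deny';
    return {
      principalId: callerId || 'anonymous',
      policyDocument: {
        Version: '2012-10-17',
        Statement: [{ Action: 'execute-api:Invoke', Effect: effect, Resource: event.methodArn }],
      },
      // Downstream integrations can read the verified caller id from the context.
      context: allowed ? { id: callerId } : {},
    };
  };
}

// Example: a hypothetical admin-only authorizer built from the same factory.
const ADMIN_IDS = new Set(['1']);
const adminOnlyAuthorizer = makeRequestAuthorizer(async (callerId) => ADMIN_IDS.has(callerId));
```

Each team then only writes its `decide` callback, while the policy format and the handling of missing credentials stay identical everywhere.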

Building the new endpoint

First, we need to build the new API endpoint that will serve these avatar images. To do that, we add an optional profile_pic property to our user model:

interface User {
    id: string,
    name: string,
    followers: Array<string>,
    profile_pic?: string  // base64-encoded image
}

Next, we modify our /bootstrap endpoint to fetch a random profile picture and store it in DynamoDB alongside the other user data as a base64-encoded string. We leave the existing /users/:id and /cached-users/:id APIs as they are; they return only id, name, and followers, which keeps those endpoints lightweight. We then introduce new /profile-pic/:id and /cached-profile-pic/:id endpoints that look up the requested user and return just the base64-encoded image for the client to render.
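The data shaping behind these endpoints can be sketched as below, assuming /bootstrap receives the picture as raw bytes. `withProfilePic`, `toUserSummary`, and `profilePicResponse` are hypothetical helper names, and returning 404 for a user without a picture is an assumption, not behavior described in this post.

```typescript
interface User {
  id: string;
  name: string;
  followers: string[];
  profile_pic?: string; // base64-encoded image
}

// /bootstrap side: attach raw image bytes to a user record as a base64 string.
function withProfilePic(user: User, imageBytes: Uint8Array): User {
  return { ...user, profile_pic: Buffer.from(imageBytes).toString('base64') };
}

// /users/:id view: only id, name, and followers, so the response stays small.
function toUserSummary(user: User): Omit<User, 'profile_pic'> {
  return { id: user.id, name: user.name, followers: user.followers };
}

// /profile-pic/:id view: just the base64 string (404 if missing is an assumption).
function profilePicResponse(user: User | undefined): { statusCode: number; body: string } {
  if (!user?.profile_pic) {
    return { statusCode: 404, body: 'profile picture not found' };
  }
  return { statusCode: 200, body: user.profile_pic };
}
```

One design constraint to keep in mind: base64 inflates the image by roughly a third, and the whole DynamoDB item, picture included, must stay under DynamoDB's 400 KB item size limit.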

Adding an API Gateway custom authorizer

Once our service can handle the new API endpoint, we add it to our AWS API Gateway resource to expose it to users. Before we do that, though, we need to write our custom authorizer Lambda. Ours is very simple. We’ll call it isFollowerAuthorizer.ts; here’s what it looks like:

import {AuthorizerRequest} from "../models/authorizer";
import {DefaultClient} from "../repository/users/users";
import {UsersDdb} from "../repository/users/data-clients/ddb";
import {getMetricLogger} from "../monitoring/metrics/metricRecorder";
// The User interface shown above; this import path is an assumption about the project layout.
import {User} from "../models/user";

const ALLOW = 'Allow', DENY = 'Deny';

// Shape of the IAM policy response API Gateway expects back from an authorizer.
interface AuthorizerResponse {
    principalId: string,
    policyDocument: {
        Version: string,
        Statement: {Action: string, Effect: string, Resource: string}[],
    },
    context: Record<string, string>,
}

// Created once per container so warm invocations reuse the client.
const ur = new DefaultClient(new UsersDdb());

export const handler = async (event: AuthorizerRequest): Promise<AuthorizerResponse> => {
    const methodArn = event.methodArn;
    try {
        return await customAuthLogic(event.headers['Authorization'], methodArn, event.pathParameters['id']);
    } catch (error) {
        // JSON.stringify(new Error(...)) yields "{}" because Error fields are
        // non-enumerable, so log the stack when we have one.
        const details = error instanceof Error ? error.stack : JSON.stringify(error);
        console.error(`fatal error occurred in authorizer err=${details}`);
        throw new Error('Server Error'); // 500.
    }
}

const customAuthLogic = async (authToken: string, methodArn: string, requestedUserId: string): Promise<AuthorizerResponse> => {
    const startTime = Date.now();
    // Note: in a real API we would first validate (AuthN) the passed 'authToken'
    // to get the verified user id. Since this is just a simple demo,
    // we blindly trust the passed simple ID value.
    //
    // ex: Authorization: 1
    //
    // Now perform the custom app AuthZ logic: here we're checking whether the
    // requesting user is a follower of the profile pic owner, based on the
    // path parameter in the API resource.
    let user: undefined | User;
    if (process.env["CACHE_ENABLED"] === 'true') {
        user = await ur.getCachedUser(requestedUserId);
    } else {
        user = await ur.getUser(requestedUserId);
    }
    if (!user) {
        throw new Error(`no user found requestedUserId=${requestedUserId}`);
    }
    if (user.followers.indexOf(authToken) < 0) {
        console.info(`non follower tried to access a profile pic requestedResourceUserId=${user.id} requestingUser=${authToken}`);
        return generateAuthorizerRsp(authToken, DENY, methodArn, {});
    }
    console.info(`successfully authenticated resource request requestedResourceUserId=${user.id} requestingUser=${authToken}`);
    getMetricLogger().record([{
        value: Date.now() - startTime,
        labels: [{k: "CacheEnabled", v: `${process.env["CACHE_ENABLED"]}`}],
        name: "authTime"
    }]);
    getMetricLogger().flush();
    return generateAuthorizerRsp(authToken, ALLOW, methodArn, {id: authToken});
}

const generateAuthorizerRsp = (principalId: string, Effect: string, Resource: string, context: Record<string, string>): AuthorizerResponse => ({
    principalId,
    policyDocument: {
        Version: '2012-10-17',
        Statement: [{Action: 'execute-api:Invoke', Effect, Resource}],
    },
    context,
});

As you can see, the CACHE_ENABLED environment variable toggles remote caching: when it is off we look the user up directly in DynamoDB, and when it is on we try our look-aside cache first.
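The look-aside (cache-aside) read behind getCachedUser can be sketched as follows. This is not the repository's actual implementation: `CacheClient`, `LoadUser`, `MapCache`, the `user:<id>` key format, and the 60-second TTL are assumptions for illustration, and a real deployment would put a remote cache client such as Momento behind the `CacheClient` interface.

```typescript
interface User {
  id: string;
  name: string;
  followers: string[];
  profile_pic?: string;
}

// Minimal cache contract; a remote cache client would implement this.
interface CacheClient {
  get(key: string): Promise<string | undefined>;
  set(key: string, value: string, ttlSeconds: number): Promise<void>;
}

type LoadUser = (id: string) => Promise<User | undefined>;

// Look-aside read: try the cache, fall back to the database, then populate the cache.
async function getCachedUser(
  cache: CacheClient,
  loadFromDb: LoadUser,
  id: string,
  ttlSeconds = 60,
): Promise<User | undefined> {
  const key = `user:${id}`;
  const hit = await cache.get(key);
  if (hit !== undefined) {
    return JSON.parse(hit) as User;
  }
  const user = await loadFromDb(id);
  if (user) {
    // Only found users are cached; misses go back to the database each time.
    await cache.set(key, JSON.stringify(user), ttlSeconds);
  }
  return user;
}

// In-memory stand-in for a remote cache, for local testing only (ignores the TTL).
class MapCache implements CacheClient {
  private store = new Map<string, string>();
  async get(key: string): Promise<string | undefined> {
    return this.store.get(key);
  }
  async set(key: string, value: string, _ttlSeconds: number): Promise<void> {
    this.store.set(key, value);
  }
}
```

The TTL bounds how stale a follower list can get: with a pure look-aside read, a newly added follower may still be denied until the cached entry expires, unless the write path also updates or deletes the cached entry.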

Next, we wire up our new API routes with the custom authorizer via CDK. There are two separate routes, /profile-pic/:id and /cached-profile-pic/:id: the first uses no caching in either the authorizer or the service, and the second uses a centralized cache for both. The first route always goes to DynamoDB, both for the custom auth check and for the supporting service. Here is how we wire up the endpoints with and without caching in CDK:

// Imports assume CDK v2 (aws-cdk-lib); CDK v1 projects import the same names from @aws-cdk/* packages.
import {join} from 'path';
import {Duration} from 'aws-cdk-lib';
import {NodejsFunction} from 'aws-cdk-lib/aws-lambda-nodejs';
import {AuthorizationType, IdentitySource, RequestAuthorizer} from 'aws-cdk-lib/aws-apigateway';

// Inside the stack constructor: nodeJsFunctionProps, dynamoTable, api, and
// svcLambdaIntegration are defined elsewhere in the stack.

// Lambda for custom authorizer with cache
const customAuthLambdaWithCache = new NodejsFunction(this, 'CustomAuthFunctionWithCache', {
    entry: join(__dirname, '../../src/functions', 'isFollowerAuthorizer.ts'),
    ...nodeJsFunctionProps,
    environment: {
        "RUNTIME": "AWS",
        "CACHE_ENABLED": "true"
    }
});

// Lambda for custom authorizer with no cache
const customAuthLambdaNoCache = new NodejsFunction(this, 'CustomAuthFunctionNoCache', {
    entry: join(__dirname, '../../src/functions', 'isFollowerAuthorizer.ts'),
    ...nodeJsFunctionProps,
    environment: {
        "RUNTIME": "AWS",
        "CACHE_ENABLED": "false"
    }
});

// Read perms for lambdas
dynamoTable.grantReadData(customAuthLambdaWithCache);
dynamoTable.grantReadData(customAuthLambdaNoCache);

// Add profile-pic API with a custom authorizer that does not use caching
api.root.addResource('profile-pic').addResource('{id}', {
    defaultMethodOptions: {
        authorizationType: AuthorizationType.CUSTOM,
        authorizer: new RequestAuthorizer(this, 'IsFollowerAuthorizerNoCache', {
            authorizerName: 'authenticated-and-friends-no-cache',
            handler: customAuthLambdaNoCache,
            // Don't cache at the gateway level: we want follower updates enforced
            // as quickly as possible for this demo. (A gateway cache would also be
            // keyed only on the Authorization header, not on the requested {id}.)
            resultsCacheTtl: Duration.seconds(0),
            identitySources: [
                IdentitySource.header("Authorization"),
            ]
        })
    },
}).addMethod("GET", svcLambdaIntegration);

// Add cached-profile-pic API with a custom authorizer that does use caching
api.root.addResource('cached-profile-pic').addResource('{id}', {
    defaultMethodOptions: {
        authorizationType: AuthorizationType.CUSTOM,
        authorizer: new RequestAuthorizer(this, 'IsFollowerAuthorizerWithCache', {
            authorizerName: 'authenticated-and-friends-with-cache',
            handler: customAuthLambdaWithCache,
            // Don't cache at the gateway level: we want follower updates enforced
            // as quickly as possible for this demo.
            resultsCacheTtl: Duration.seconds(0),
            identitySources: [
                IdentitySource.header("Authorization"),
            ]
        })
    },
}).addMethod("GET", svcLambdaIntegration);

Testing the endpoint

To start, we’re going to look up basic information for user 0.

$ curl https://j2azklgb6h.execute-api.us-east-1.amazonaws.com/prod/users/0 -s | jq .
{
  "id": "0",
  "followers": [
    "95",
    "77",
    "22",
    "65",
    "39"
  ],
  "name": "Relaxed Elephant"
}

We can see that user 0 has five followers: 95, 77, 22, 65, and 39. Let’s quickly test our custom authorizer to make sure it works. First, let’s try to fetch the user’s profile picture as follower 22. (Note: your followers for user 0 will be different since they’re randomly generated. Adjust the Authorization header value to match your test user.)

$ curl -s -o /dev/null -w "\nStatus: %{http_code}\n" \
    -H "Authorization: 22" \
    https://j2azklgb6h.execute-api.us-east-1.amazonaws.com/prod/profile-pic/0

Status: 200
$

We get status 200 back. The response body is the base64-encoded string of the user’s profile picture; we write it to /dev/null here because we only want to confirm that the custom authorizer is working.
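Because the authorizer's rule is a plain membership check, it can also be exercised offline against the sample record above before calling the deployed API. `followerDecision` and `expectedStatus` are hypothetical helpers; mapping Allow to 200 assumes the service Lambda itself succeeds, as it did in this test.

```typescript
type Effect = 'Allow' | 'Deny';

// Same rule as isFollowerAuthorizer.ts: only followers of the owner may fetch the picture.
function followerDecision(followers: readonly string[], requesterId: string): Effect {
  return followers.includes(requesterId) ? 'Allow' : 'Deny';
}

// API Gateway answers an explicit Deny with 403 before the service Lambda runs;
// an Allow passes the request on to the service (200 assumes the service succeeds).
function expectedStatus(effect: Effect): number {
  return effect === 'Allow' ? 200 : 403;
}

// Followers of user 0 from the /users/0 response above (yours will differ).
const user0Followers = ['95', '77', '22', '65', '39'];
```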

Now if we try again with the Authorization header for user 44, who is not a follower of user 0, the authorizer explicitly denies the request and our API returns HTTP 403.

$ curl -s -o /dev/null -w "\nStatus: %{http_code}\n" \
    -H "Authorization: 44" \
    https://j2azklgb6h.execute-api.us-east-1.amazonaws.com/prod/profile-pic/0

Status: 403
$

Evaluating performance

To compare these endpoints, we’ll hit each API 100 times with a simple curl loop and then chart some of the custom metrics we record to measure latency.

Using the same successful request from the previous test, we hit our profile picture endpoint with the cache off and on:

No Cache:

$ for i in `seq 1 100`; do
    curl -o /dev/null -H "Authorization: 22" https://j2azklgb6h.execute-api.us-east-1.amazonaws.com/prod/profile-pic/0 -s
done;

With Cache:

$ for i in `seq 1 100`; do

curl -o /dev/null -H "Authorization: 22" https://j2azklgb6h.execute-api.us-east-1.amazonaws.com/prod/cached-profile-pic/0 -s

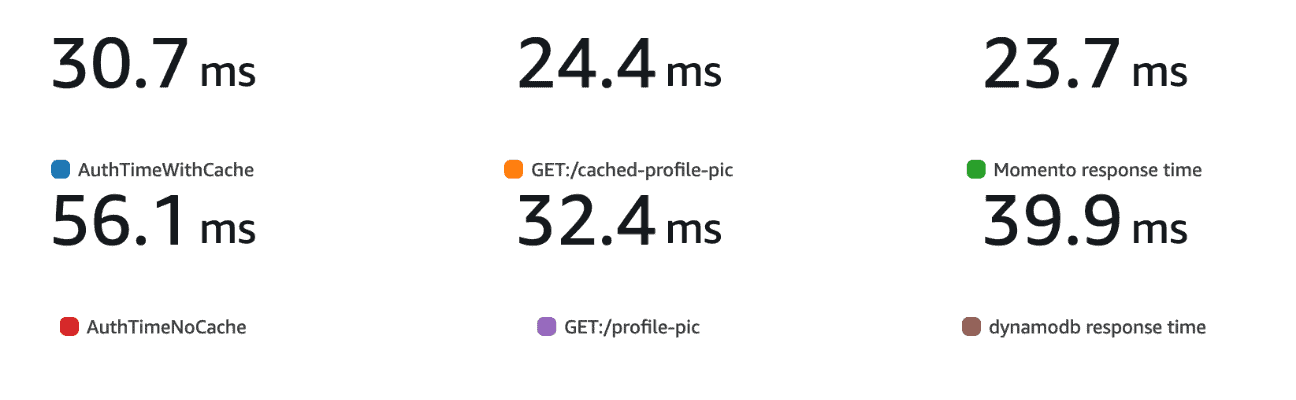

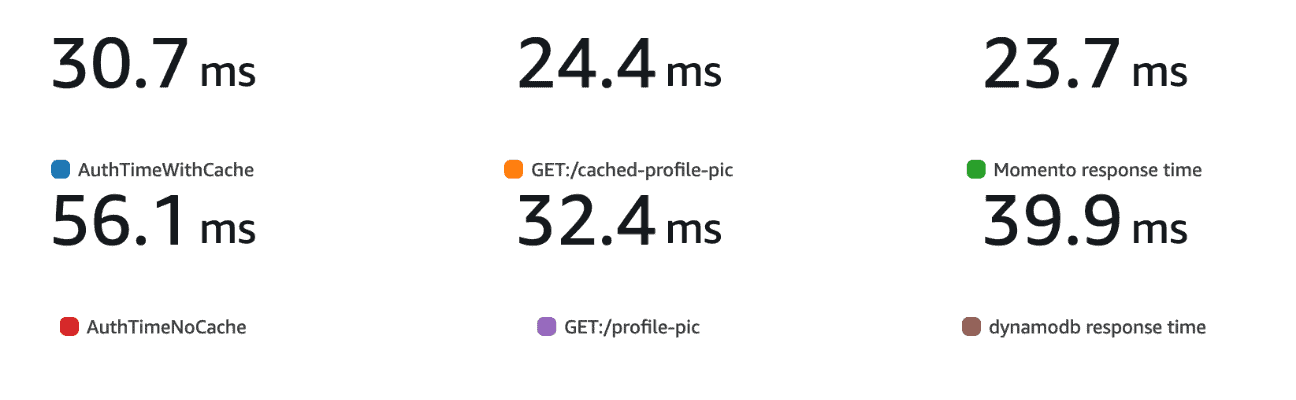

done;After running a few iterations of each of these little load generator scripts we can chart the custom metrics we report in CloudWatch to compare latency for the various parts of our serverless APIs. Looking at average response times we can see we were able to drop our latencies by adding in a remote cache for our authorizer and service.

This trend continues into the upper percentiles of our tail latencies looking at p99 response times as well.
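The charts compare averages and p99 values. The sketch below shows how those two statistics differ when computed from raw latency samples, such as the authTime values the authorizer records. It uses the nearest-rank percentile definition, which is one of several; CloudWatch's own percentile statistics may be computed differently, and `percentile` and `summarize` are hypothetical helpers.

```typescript
// Nearest-rank percentile: the smallest sample such that at least p% of samples are <= it.
function percentile(samples: number[], p: number): number {
  if (samples.length === 0) throw new Error('no samples');
  const sorted = [...samples].sort((a, b) => a - b);
  // Multiply before dividing to avoid floating-point error in the rank.
  const rank = Math.ceil((p * sorted.length) / 100);
  return sorted[Math.max(rank, 1) - 1];
}

// Average and p99 latency (in whatever unit the samples use, e.g. milliseconds).
function summarize(samples: number[]): { avg: number; p99: number } {
  const avg = samples.reduce((sum, x) => sum + x, 0) / samples.length;
  return { avg, p99: percentile(samples, 99) };
}
```

For example, with 98 samples at 20 ms and two at 400 ms, the average is 27.6 ms while p99 is 400 ms, which is why an improvement in averages does not by itself guarantee an improvement in tail latency.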

Serverless + Remote Caching = ❤️

As you can see, enabling caching on both our custom authorizer and our service gives a clear benefit for the new profile image API. With an API Gateway custom authorizer, we can pull the AuthN/AuthZ decision out of our service code, improving performance and safety for dev teams, while Momento lets both functions share the same cache. Breaking an application into multiple functions has real benefits, but it also makes remote caching more important: here the authorizer and the service both need the same user record, and without a shared cache each would make its own round trip to DynamoDB.