Reducing cloud infrastructure cost is easier than you think

Modify your backend caching strategy and cut your cloud infrastructure cost by 50%.

The current macroeconomic situation is not good, and it’s deteriorating by the day. Cloud-based development and platform teams are being pushed to achieve aggressive cost reduction goals—and quickly. Whenever possible, it’s essential to start with cost-efficient infrastructure that preserves availability and performance today without sacrificing the capabilities to build innovative new apps tomorrow. Due to the explosive growth of cloud computing over the past decade, many organizations chose rapid expansion in the name of near-term innovation and digital transformation gains rather than rigorous optimizations and cost controls. As a result, there are pockets of the standard cloud footprint with overlooked potential for cost control—without mortgaging the future. The trick is knowing where to look.

One of the places in the cloud stack with opportunity for cost optimization is the backend caching infrastructure.

Backend caching of databases and datastores is as ubiquitous in today’s cloud architectures as plumbing is in a house: it’s essential, but it’s buried deep and doesn’t usually draw a lot of attention unless something goes wrong. Because of this, most organizations don’t have a strategic plan for caching.

Across many of today’s largest cloud computing customers, the caching infrastructure is typically a combination of Redis, Memcached, and misappropriated tools such as SQL database clusters. The Redis and Memcached clusters are often a random mixture of managed and self-managed services on raw compute instances or VMs, further fracturing what should be a holistic caching strategy. Cobbling together existing solutions this way makes backend caching needlessly complex and blurs best practices. As a result, many applications that would benefit from caching either miss out on it or actively avoid it. Momento Cache changes this. It’s tailor-made for the modern cloud, embracing multi-tenancy and the serverless paradigm to create a highly performant, easy-to-use backend caching solution.

With a holistic caching strategy and the right tool to execute it, you can achieve cost savings across multiple layers of your cloud infrastructure—adding up to millions in savings for large cloud customers—all while delivering performance and uptime gains with lower operational risk. A rare win-win.

Six layers where you can reduce your cloud infrastructure cost

1. Optimize your total database spend

Databases collectively are often a top-three line item on large cloud customers’ bills, and even though backend caching is one of the best ways to improve performance, stability, and cost efficiency, it’s not always implemented. Sometimes this is due to a simple lack of awareness; sometimes it’s because a team is working through multiple growth cycles and lacks the time or resources to get it done. Whatever the situation, adding a cache will always feel either “too early” or “too late”. Make the time to add caching to your databases now.

Operating a data cache is cheaper than scaling up a database. CBS Sports, a Momento customer, reports that caching 90% of a typical high-volume application’s traffic requires only four managed database nodes, as opposed to 16 without caching. A 75% reduction in database instances is a massive cost win, and that’s on top of performance and availability improvements!
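The arithmetic behind that claim can be checked with a short sketch. The node counts (16 and 4) and the 90% hit rate come from the example above; the request rate is a made-up figure and the helper names are purely illustrative. The source does not state per-node capacity, so the sketch does not try to derive the node count from the hit rate.

```python
def percent_reduction(before: int, after: int) -> float:
    """Percentage drop from `before` to `after`."""
    return (before - after) / before * 100


def requests_reaching_database(total_rps: float, cache_hit_rate: float) -> float:
    """Requests per second that miss the cache and fall through to the database."""
    return total_rps * (1 - cache_hit_rate)


# Node counts quoted in the example above.
print(percent_reduction(16, 4))  # 75.0

# Hypothetical traffic: 50,000 requests/s with 90% served from the cache.
print(requests_reaching_database(50_000, 0.90))
```

Only about a tenth of the traffic reaches the database in this scenario, which is why far fewer nodes are needed to serve it.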

2. Optimize your caching spend

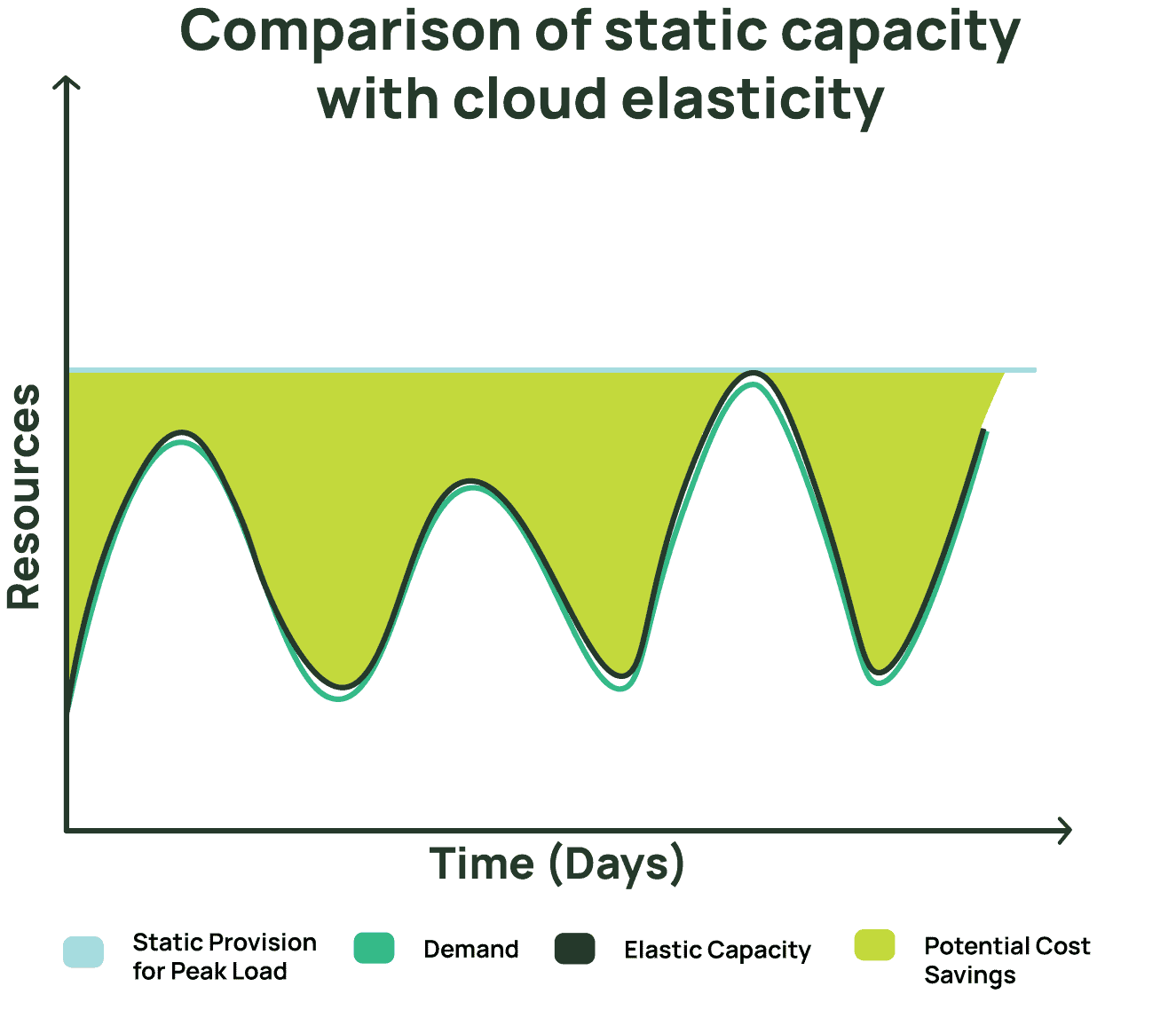

Cloud economics 101 has always been rooted in elasticity and autoscaling. Unfortunately, these foundations don’t apply to legacy Redis and Memcached managed cloud services. The graph above is a common visual representation of the lowest-hanging fruit among the cost benefits of elastic cloud resources. All the space between the top blue line and the wavy green lines is your immediate cost savings. With static capacity, you pay for peak load all the time; with cloud elasticity, you only pay for what you use.

Managed Redis and Memcached services such as Amazon ElastiCache and Google Memorystore are single-tenant services: each customer gets their own cluster or collection of their own clusters. They are not multi-tenant like modern serverless databases such as Amazon DynamoDB or Google Firestore. This is why DynamoDB and Firestore are so efficient and why serverless matters for cost efficiency of databases in the first place. With single-tenant clusters, there is no such thing as warm capacity. Cold starting nodes can take up to 15 minutes—which your crumbling database tells you is about 14 minutes and 59 seconds too late. This forces customers to observe the antiquated model of overprovisioning for peak load—that top light blue line—and running it 24-7 to avoid outages, when their actual consumed caching load is much lower over time due to peaks and valleys of demand.
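To make the gap between peak provisioning and actual usage concrete, here is a minimal sketch. The hourly load profile is invented for illustration, and the model assumes the same unit price for static and elastic capacity, which real pricing will not match exactly.

```python
# Hypothetical hourly cache load over one day, in thousands of requests/s.
hourly_load = [20, 15, 12, 10, 10, 14, 25, 40, 60, 75, 80, 85,
               90, 88, 82, 78, 80, 95, 100, 85, 60, 45, 30, 25]


def static_capacity_hours(load: list[float]) -> float:
    """Capacity-hours paid for when provisioning for the peak around the clock."""
    return max(load) * len(load)


def elastic_capacity_hours(load: list[float]) -> float:
    """Capacity-hours paid for when capacity tracks actual load each hour."""
    return sum(load)


static = static_capacity_hours(hourly_load)
elastic = elastic_capacity_hours(hourly_load)
idle_share = (static - elastic) / static  # fraction of paid capacity left unused

print(f"static: {static}, elastic: {elastic}, idle share: {idle_share:.0%}")
```

The idle share is the area between the flat peak line and the demand curve in the graph, expressed as a fraction of what a statically provisioned cluster pays for.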

This is where Momento Cache comes in, delivering truly elastic backend caching through warm capacity made possible by a multi-tenant distributed architecture, just like DynamoDB or Firestore. By replacing legacy Redis and Memcached clusters (overprovisioned by as much as 80%) with Momento Cache, we’ve seen customers cut their caching costs by more than half. That’s a lot of savings on a significant cloud bill line item.

3. Optimize your small object storage costs

This hidden opportunity is subtle, but it’s not uncommon.

Cloud object storage services like Amazon S3, Google Cloud Storage, and Azure Blob Storage are the undisputed cost leaders for durable storage. You won’t find a lower price to durably store your GBs, TBs, or PBs of data, but there is one pricing dimension to these services that’s often overlooked: you are charged for every PUT and GET request you make. These charges are called request fees.

If you’re not making millions of PUTs and GETs per month, you barely notice these charges. For example, in their US-East regions, Amazon S3 and Google Cloud Storage charge $0.005 per 1,000 PUTs and $0.0004 per 1,000 GETs. That seems trivial, but for customers dealing with a huge volume of tiny objects, these fees become meaningful.

The questions to ask yourself are: do your small files really need the durability of these object stores? Are these files only relevant for a short time? If one of them were to go away, could you fall back to a default and regenerate it from an original source?

Think media previews (image thumbnails, downscaled video snippets, low-fidelity audio samples) or any kind of small metadata summarizing a larger object. Trading away some durability for this kind of data can reduce both cost and latency.
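The "fall back and regenerate" idea can be sketched as a small cache with expiry. This is a generic in-memory illustration, not Momento's API: `TTLCache`, `get_thumbnail`, and `render_from_video` are hypothetical names, and a real deployment would use a shared cache service instead of a local dictionary.

```python
import time
from typing import Callable, Optional


class TTLCache:
    """Tiny in-memory cache with per-item expiry (illustrative only)."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._items: dict[str, tuple[float, bytes]] = {}

    def get(self, key: str) -> Optional[bytes]:
        entry = self._items.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            # Expired: drop the item and report a miss.
            del self._items[key]
            return None
        return value

    def set(self, key: str, value: bytes) -> None:
        self._items[key] = (self._clock() + self._ttl, value)


def get_thumbnail(cache: TTLCache, video_id: str,
                  regenerate: Callable[[str], bytes]) -> bytes:
    """Return a cached thumbnail, regenerating it from the source on a miss."""
    cached = cache.get(video_id)
    if cached is not None:
        return cached
    fresh = regenerate(video_id)
    cache.set(video_id, fresh)
    return fresh


def render_from_video(video_id: str) -> bytes:
    # Hypothetical stand-in for re-deriving the preview from the original video.
    return f"thumbnail-for-{video_id}".encode()


cache = TTLCache(ttl_seconds=3600)
get_thumbnail(cache, "video-123", render_from_video)  # miss: regenerated and stored
get_thumbnail(cache, "video-123", render_from_video)  # hit: served from the cache
```

Because a lost or expired item is simply rebuilt from its source, the data does not need the durability guarantees of an object store.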

Consider this example from Momento customer Wyze Labs, a smart home company. They store thumbnail images of every video captured by their millions of motion-activated security cameras worldwide. The thumbnail files are tiny at only a few KB each, but a few hundred million of them are stored monthly. At this volume, the request fees had become 97% of Wyze’s total Amazon S3 cost for the workload. Moving to Momento Cache reduced that cost by more than 50% and improved latencies in the process.
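A rough calculation shows how request fees can dominate the bill for tiny objects. The request prices are the ones quoted earlier in this section; the storage price of $0.023 per GB-month is an assumed standard-tier list price, and the workload numbers are hypothetical. This is not a reconstruction of Wyze's actual bill, and it ignores data retained from earlier months.

```python
PUT_PRICE_PER_1000 = 0.005          # USD, request fee quoted in this section
GET_PRICE_PER_1000 = 0.0004         # USD, request fee quoted in this section
STORAGE_PRICE_PER_GB_MONTH = 0.023  # USD, assumed standard-tier list price


def monthly_costs(objects: int, object_kb: float,
                  gets_per_object: float) -> dict[str, float]:
    """Split one month's cost for newly written objects into requests and storage."""
    put_fees = objects / 1000 * PUT_PRICE_PER_1000
    get_fees = objects * gets_per_object / 1000 * GET_PRICE_PER_1000
    storage_gb = objects * object_kb / 1_000_000  # KB -> GB (decimal units)
    storage = storage_gb * STORAGE_PRICE_PER_GB_MONTH
    return {"requests": put_fees + get_fees, "storage": storage}


# Hypothetical workload: 300 million 5 KB thumbnails, each read twice.
costs = monthly_costs(objects=300_000_000, object_kb=5, gets_per_object=2)
share = costs["requests"] / (costs["requests"] + costs["storage"])

print(f"requests: ${costs['requests']:,.2f}, storage: ${costs['storage']:,.2f}, "
      f"request share: {share:.0%}")
```

With objects this small, the stored bytes cost very little, so nearly all of the spend comes from the per-request charges.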

4. Optimize your machine learning (ML) platform costs

While the cloud industry has been racing to leverage rapidly accelerating ML and deep learning innovations for the past five years, efficiency and optimization for the required infrastructure have been left in the dust.

Data scientists are brilliant at writing algorithms, crafting predictive models, creating AI services, and generally turning data into magic, but they’re rarely cloud infrastructure experts, let alone caching experts. This is an issue because caching is a vital tool for real-time feature stores and predictions.

This lack of caching expertise within ML teams, combined with highly inefficient legacy backend caching solutions and the rushed rollout of ML infrastructure, creates a meaningful opportunity for cost optimization. All Momento customers who have implemented Momento Cache in their ML workflows have streamlined their cost profile, reduced latencies, and sped up model serving times.

5. Improve developer productivity

If your developers are working with the aforementioned Redis and Memcached services, they are wasting precious cycles on operations that don’t provide core business value—like working around maintenance windows, manual sharding, data replication, managing hot keys and failovers/backups, monitoring cache hit rates, and more.

With a serverless API-first architecture, Momento Cache takes care of all of these details. Caching becomes as simple as five lines of code, meaning time back for your developers to work on business-critical tasks that deliver significant savings to your organization.

6. Eliminate costly downtime

All the manual operations required by legacy backend caching services are intricate, error-prone, and operationally risky, inevitably resulting in expensive failures and downtime. Those points of vulnerability are removed with a serverless backend caching solution. By eliminating these outages, along with the lost dollars and brand damage that result, you reduce your total cost of ownership for caching.

Conclusion

You may not even realize what your legacy caching services are costing you, but there is no time like now to take back control. Today is a gift—that’s why they call it the present. Implement a strategic caching plan, leverage modern tools like Momento Cache, cut your cloud infrastructure cost—and fortify your architecture while you’re at it.