Oops, Momento ate 98% of my GCP Cloud Run and Firestore latencies!

Serverless caching makes it easy to reduce your Cloud Run and Firestore latencies.

In my previous blog post, I dove deep on how we could leverage Momento Cache to reduce latencies on our serverless Lambda API in Amazon Web Services (AWS). This time, I’m shifting gears to discuss how integrating Momento with Google Cloud Platform (GCP) can reduce Cloud Run and Firestore latencies.

Test Setup

Service

To quickly recap the setup for this test, we’re building a social network where each user has followers. Your frontend app has to download the names of each follower of the current user to render on the device. We expose the following APIs:

GET /users and /cached-users

Makes 1 call to Firestore (/users) or Momento (/cached-users)

Example response:

    {
      "id": "1",
      "followers": [
        "26",
        "65",
        "49",
        "25",
        "6"
      ],
      "name": "Lazy Lion"
    }

GET /followers and /cached-followers

Makes 1 call to either Firestore (/followers) or Momento (/cached-followers) for the passed user ID and then N (5 for this test) additional calls to either Firestore or Momento to look up each follower name.
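To make that call pattern concrete, here is a minimal sketch of a cache-aside lookup: check the cache first, fall back to the database on a miss, then populate the cache. It is an illustration under assumptions, not the service's actual code. `UserStore`, `InMemoryStore`, `getCachedUser`, and `getCachedFollowerNames` are hypothetical names, and the in-memory stores stand in for Momento and Firestore so the example runs on its own. The follower lookups are done one after another here, which is also an assumption.

```typescript
// Minimal cache-aside sketch. The Map-backed stores below are stand-ins
// (assumptions) for Momento and Firestore so the example is self-contained.
interface User {
  id: string;
  name: string;
  followers: string[];
}

// Hypothetical interface; real implementations would wrap the Momento and
// Firestore SDK clients.
interface UserStore {
  get(id: string): Promise<User | undefined>;
  set(user: User): Promise<void>;
}

class InMemoryStore implements UserStore {
  reads = 0; // counts lookups so we can see which store served a request
  private data = new Map<string, User>();

  async get(id: string): Promise<User | undefined> {
    this.reads++;
    return this.data.get(id);
  }

  async set(user: User): Promise<void> {
    this.data.set(user.id, user);
  }
}

// Read from the cache first; on a miss, read the database and populate the
// cache so the next request for the same user is served from the cache.
async function getCachedUser(
  id: string,
  cache: UserStore,
  db: UserStore
): Promise<User | undefined> {
  const hit = await cache.get(id);
  if (hit) return hit;
  const user = await db.get(id);
  if (user) await cache.set(user);
  return user;
}

// 1 lookup for the requested user, then N lookups for the follower names.
async function getCachedFollowerNames(
  id: string,
  cache: UserStore,
  db: UserStore
): Promise<string[]> {
  const user = await getCachedUser(id, cache, db);
  if (!user) return [];
  const names: string[] = [];
  for (const followerId of user.followers) {
    const follower = await getCachedUser(followerId, cache, db);
    if (follower) names.push(follower.name);
  }
  return names;
}
```

On the first request every lookup misses the cache and goes to the database. A repeat request for the same user is served entirely from the cache.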

Example response:

    {
      "id": "1",
      "followers": [
        "26",
        "65",
        "49",
        "25",
        "6"
      ],
      "name": "Lazy Lion"
    }

I’m newer to GCP and have almost exclusively focused on AWS in my career, so I was really excited to dig into the GCP developer experience. I started by working out what I would have to change in the basic user service we were already running in AWS to get it running on GCP.

The main services I needed alternatives for were:

- Database

- Metrics

- Runtime

- Infrastructure as Code (IaC)

Database

I started with the highly scientific approach of firing up my trusty search engine (DuckDuckGo ftw) and searching for “best GCP alternative to DynamoDB”. The top recommendation was Firestore, GCP’s NoSQL counterpart to AWS DynamoDB. Sweet, that was easy. Let’s give it a try!

Metrics

In AWS, I was using aws-embedded-metrics to collect metrics and export them via CloudWatch Logs. Some quick searching told me Google Cloud Metrics (part of Cloud Monitoring) is GCP’s counterpart to CloudWatch Metrics. This looked easy enough, and there is a Node.js SDK.

Runtime

There were several options here. Google Cloud has Cloud Run, Cloud Functions, GKE (managed k8s), and just basic VMs. I didn’t want to deal with VMs and the overhead that comes with managing operating systems and networks, and I didn’t feel like popping open the k8s can for a simple REST API. I considered Cloud Functions since I used Lambda in AWS, but I wanted to port my simple API into a container so that future experiments comparing results across cloud environments would be easier. So, I ended up settling on Cloud Run. It seemed like the simplest and quickest way to get up and running, and it gave me a path to make my service more portable in the future on GCP.

Infrastructure as Code

I’m a huge fan of IaC libraries. I think it’s the only way to be working in the cloud in 2022 and cannot imagine going back to working directly in YAML as a platform engineer. I currently use Cloud Development Kit (CDK) in AWS, and in the past I have used CDK’s Terraform extension (CDK-TF) to help me with provisioning infrastructure in GCP. This time, though, I was looking to try something new, and Pulumi’s Cloud Run TypeScript example looked simple and clean. I also wanted to showcase how it’s possible to use the best tools for the job and switch them out easily between runtimes as long as you keep your code base well-organized.

Once I had my technologies chosen, I went about implementing my service and getting up and running. I won’t really get into it in this blog, but you can check out the full repo here—and stay tuned for a few dedicated follow-up posts on multi-cloud best practices and comparing different GCP technologies.

GCP-Specific Tuning

I really did not change much from the default Pulumi Cloud Run service construct. The main thing was tweaking a few settings so that the runtime looked similar to my AWS Lambda setup and the performance comparison would be like-for-like.

The settings that I tweaked from defaults were:

- CPU: I chose a limit of 2000m for each container, which is equivalent to 2 vCPUs. I had been using 2GB of memory in AWS for my Lambdas, which also used 2 vCPUs under the hood.

- Memory: I set this to 2Gi for each container to match the amount of memory I had for Lambda in AWS.

- Timeout: I reduced this to a max of 15 seconds from the default of 5 minutes 🤯.

- Container Concurrency: I reduced this to 1 to try matching how AWS Lambda handles max concurrency to each Firecracker microVM under the hood, limiting each one to handling a single request at a time. Cloud Run uses Knative under the hood, so it’s still functioning similarly to AWS Lambda, especially when I am limiting each container to handle a single request at a time.

You can see the full Pulumi Cloud Run construct code here:

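    import * as gcp from "@pulumi/gcp";

    // `location`, `project`, and `myImage` are defined elsewhere in the repo.
    const service = new gcp.cloudrun.Service("ts-api-svc", {
      location,
      template: {
        spec: {
          // Fail requests after 15 seconds.
          timeoutSeconds: 15,
          // One request per container at a time, similar to AWS Lambda.
          containerConcurrency: 1,
          containers: [{
            envs: [
              {
                name: "RUNTIME",
                value: "GCP"
              },
              {
                name: "PROJECT_ID",
                value: project
              }
            ],
            image: myImage.imageName,
            resources: {
              // 2 vCPUs and 2Gi of memory to match the Lambda setup.
              limits: {
                cpu: "2000m",
                memory: "2Gi",
              },
            },
          }],
        },
      },
    });

So how was the performance?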

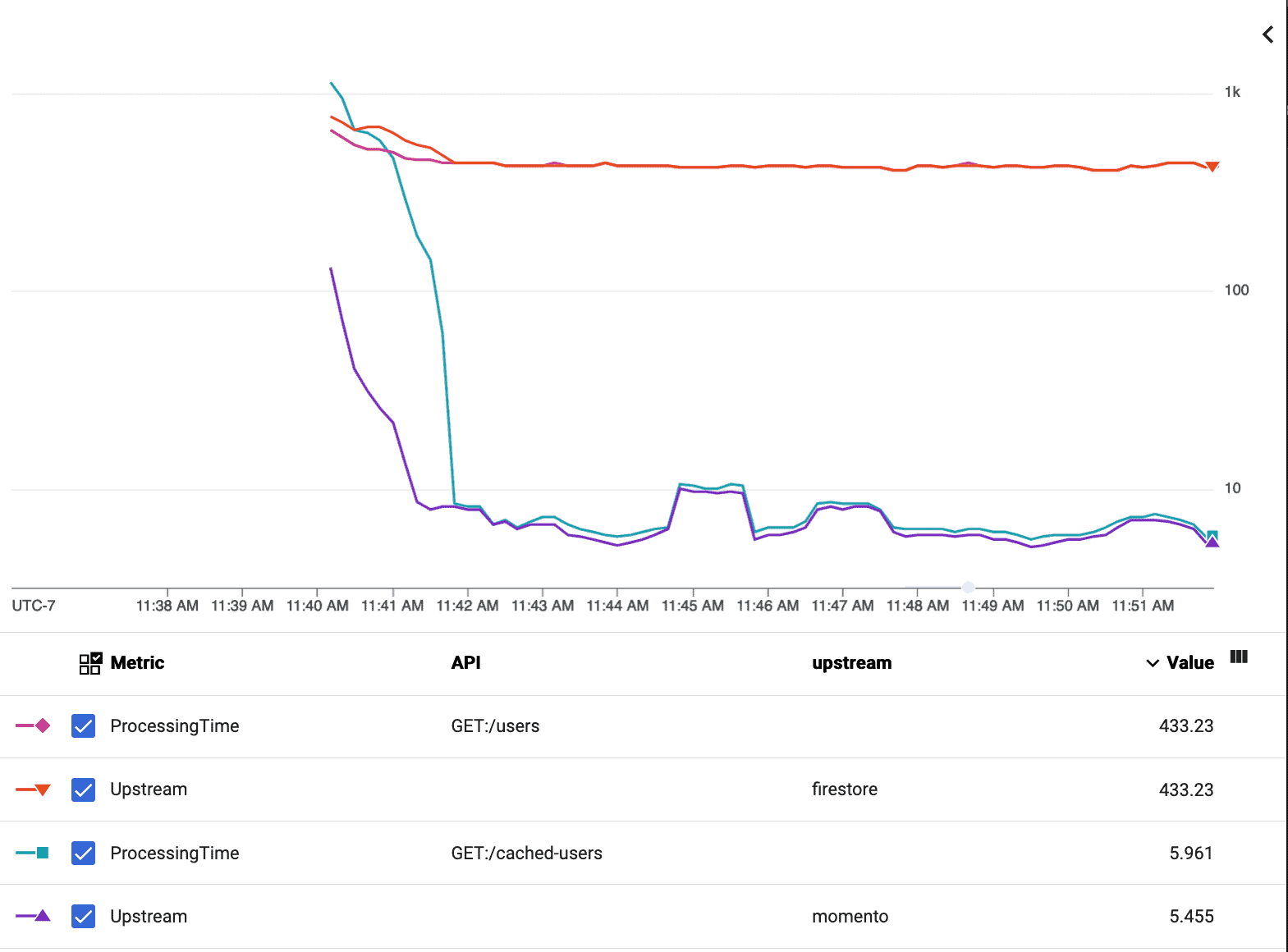

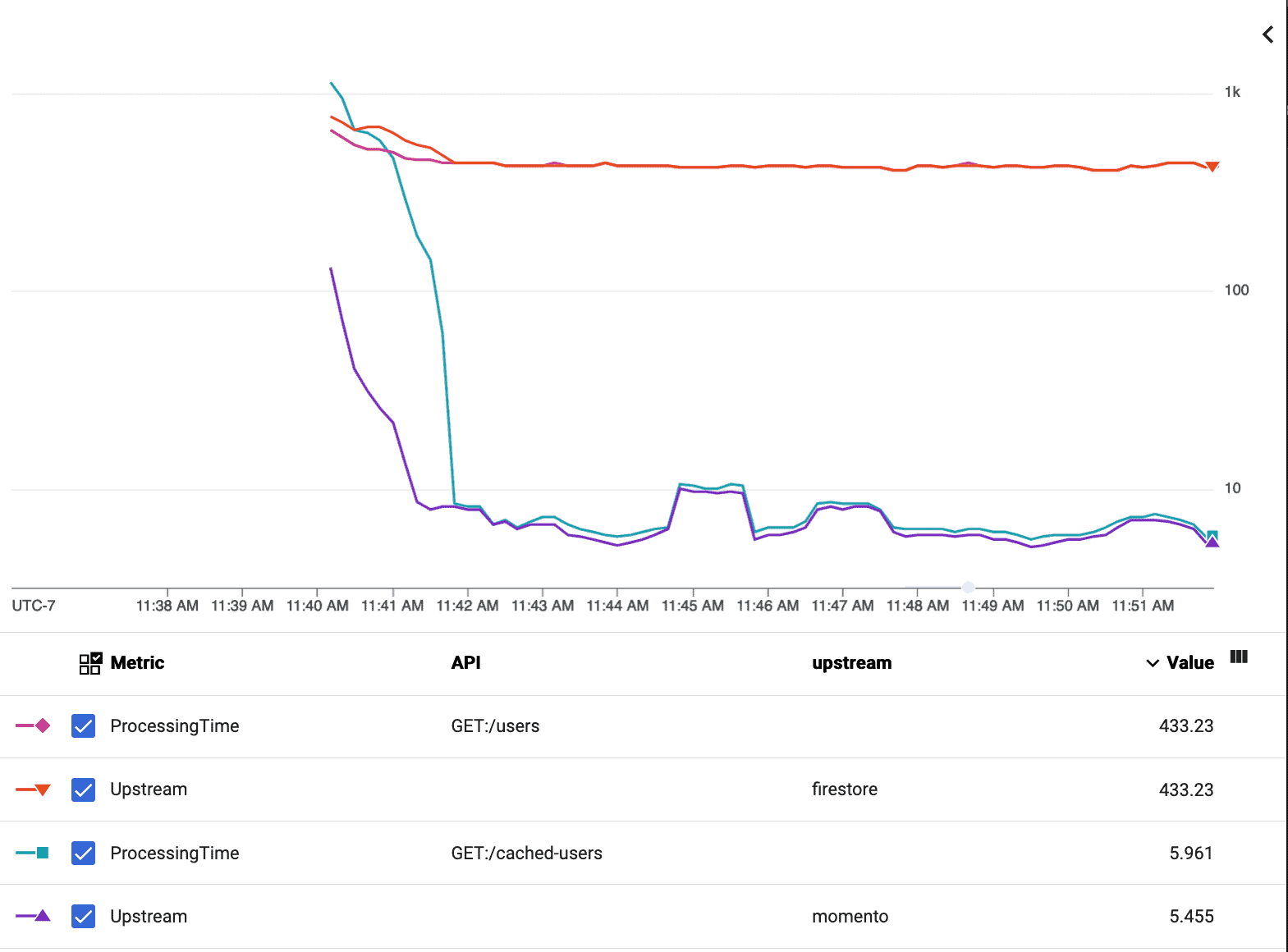

Just like in AWS, I ran a basic load test from my laptop using Locust. We were running our API in GCP region us-east1 and using Momento Cache and Firestore in us-east1 as well. I measured latency for the overall API endpoints as well as the time spent on requests to Momento or Firestore from the perspective of the Node.js container. Then, I recorded the timing results in Google Cloud Metrics.
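For a rough idea of how the time spent in a single data-store call can be captured inside the container, here is a minimal sketch. The `timed` helper, the `LatencySink` type, and the in-memory `samples` array are all hypothetical names for illustration. A real service would forward each sample to its metrics backend rather than keep it in memory, and `Date.now()` only gives millisecond resolution.

```typescript
// Hypothetical sink for latency samples; a real service would forward these
// to its metrics backend instead of keeping them in memory.
type LatencySink = (metricName: string, millis: number) => void;

// Runs `operation`, reports how long it took (whether it succeeded or threw),
// and returns its result unchanged.
async function timed<T>(
  metricName: string,
  sink: LatencySink,
  operation: () => Promise<T>
): Promise<T> {
  const start = Date.now();
  try {
    return await operation();
  } finally {
    sink(metricName, Date.now() - start);
  }
}

// In-memory sink used only for this example.
const samples: Array<{ metricName: string; millis: number }> = [];
const recordSample: LatencySink = (metricName, millis) => {
  samples.push({ metricName, millis });
};
```

Wrapping only the data-store call, for example `await timed("get-user", recordSample, () => lookUpUser(id))` with a hypothetical `lookUpUser`, keeps that measurement separate from the overall endpoint latency.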

Looking at Firestore latencies vs. Momento latencies for /users and /cached-users (strictly p99), there was a 98% reduction—from 433.2ms down to 6.0ms.

When you start making multiple calls to your database from a single API, you can really see how tail latencies start to stack up and the acceleration becomes even more extreme. Looking at Firestore latencies vs. Momento latencies for /followers and /cached-followers (strictly p99), there was a 99.7% reduction—from 4610.0ms to 14.1ms!
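The arithmetic behind those percentages, and one way to see why tails stack up, can be sketched as follows. The function names are made up for illustration, and the tail estimate assumes each call's latency is independent of the others, which is a simplification.

```typescript
// Percentage reduction from a baseline latency to an improved latency.
function percentReduction(beforeMs: number, afterMs: number): number {
  return ((beforeMs - afterMs) / beforeMs) * 100;
}

// Chance that at least one of `calls` independent requests lands in the
// slowest 1% (at or beyond the p99 latency of a single call).
function chanceOfHittingTail(calls: number, tailFraction = 0.01): number {
  return 1 - Math.pow(1 - tailFraction, calls);
}

console.log(percentReduction(433.2, 6.0).toFixed(1));   // 98.6
console.log(percentReduction(4610.0, 14.1).toFixed(1)); // 99.7

// A single call hits the slowest 1% about 1 time in 100. With 1 + 5 = 6
// calls per request, roughly 1 request in 17 includes a tail-latency call.
console.log((chanceOfHittingTail(1) * 100).toFixed(1)); // 1.0
console.log((chanceOfHittingTail(6) * 100).toFixed(1)); // 5.9
```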

Lessons learned

Momento dropped my p99 Firestore latencies by over 98% with just a few minutes of effort. This means lower Cloud Run costs, happier users, and a more scalable system. I was really impressed by the developer experience on GCP and how quickly I could get up and running. Now I can get a consistent sub-10ms response time for my API, just like in AWS! I’m looking forward to diving deeper into comparing other cache offerings and trying out some of the other runtimes.

If you’re an engineer currently using Firestore or running your application in GCP—especially if your workloads are bursty and hard to predict (looking at you, gaming)—I highly recommend you give Momento a spin to see how it can save you money and make your users happier.

Follow me on Twitter or LinkedIn to stay up to date with my latest experiments.