How caching fits into your Amazon Aurora scaling strategy

Scaling Amazon Aurora by caching reads doesn't have to be complicated.

Amazon RDS and Aurora are popular solutions for managed relational databases in the AWS cloud, particularly for the PostgreSQL and MySQL engines. Aurora is now also offered in “serverless” form (I think it does pretty well on our serverless litmus test, although scaling in units that have fixed memory/compute/network ratios is not ideal), but the fundamentals are always the same. In this article, I’m going to tease apart those fundamentals so you can understand when it makes sense to add more replicas, and when caching makes more sense.

A replica here, a replica there… consistently replicating everywhere?

Looking under the hood at RDS/Aurora, you’ll find a writer replica and (optionally) a number of reader replicas. The writer replica owns the authoritative view of your data at any given moment. All writes are applied via the writer, and if you want to immediately see the effect of those writes with a follow-up read, you’ll have to make your read queries through the writer replica too. This is called “read-after-write consistency”. The reader replicas are not read-after-write consistent because the replication is asynchronous. But they are transactionally consistent: changes are replicated from the writer replica to the reader replicas in order, and multi-row changes made within a transaction are applied atomically (as one). When you read from a reader replica, you’ll get a result with rows that make sense relative to each other, but it may not be the very latest view. These “eventually consistent” reads are fine most of the time. After all, by the time your client receives a read response to work with, the data may already have been changed in the database by some other thread. Want to know more about various forms of consistency? Check out this very thorough article by Alex DeBrie.
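One way to picture the split between the two kinds of reads is a small routing helper. The following is a minimal Python sketch, not an Aurora or RDS API: the `Endpoints` class, the `pick_endpoint` function, and both hostnames are hypothetical placeholders. It only assumes that your cluster gives you one address for the writer and one for the readers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoints:
    """Hostnames for a cluster; the values used below are made-up placeholders."""
    writer: str
    reader: str


def pick_endpoint(endpoints: Endpoints, *, needs_read_after_write: bool) -> str:
    """Return the host a read query should be sent to.

    Reads that must observe the caller's own latest write go to the writer.
    Everything else can tolerate replication lag and goes to a reader.
    """
    if needs_read_after_write:
        return endpoints.writer
    return endpoints.reader


# Hypothetical hostnames, for illustration only.
ENDPOINTS = Endpoints(
    writer="writer.example.internal",
    reader="reader.example.internal",
)

# A user just saved their profile and reloads the page: read from the writer.
profile_host = pick_endpoint(ENDPOINTS, needs_read_after_write=True)

# A product listing that can be slightly stale: read from a reader.
listing_host = pick_endpoint(ENDPOINTS, needs_read_after_write=False)
```

Making the consistency requirement an explicit argument forces each call site to state whether it can tolerate a slightly stale result, which is the same question you will ask later when deciding what can be cached.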

The writer replica has lots of stuff to do—maybe more than a reader

The writer replica handles all changes, so in a write-heavy workload you can expect to see this in its metrics. And a writer’s work is never done: there’s also work involved in the replication itself. In Aurora, much of the replication work is handled within the storage layer (which keeps a fixed number of copies of the data: six!), but there is still some effort involved in keeping each reader replica in sync. In RDS, all that work has to be done by the replicas themselves, with no fancy shared storage layer. A key point is this: the more replicas you have, the more work is being done to manage replication, and that work is concentrated on the writer replica. So, why add replicas? And how many is enough?

The durability factor

If you’re working with a production database, chances are that you like the idea of durability. There are actually two ways to think about this: 1/ resiliency against data loss in long term storage, and 2/ redundant persistent storage of each new write so that the failure of a single component (and subsequent failover of the writer replica) doesn’t make those latest changes disappear before they are fully replicated for longer term storage.

The good news is that Aurora takes care of #1 for you: its storage layer is inherently highly durable. For RDS, you need to think about #1 yourself, but a total of three replicas across three AZs is probably as durable as you can get, because beyond three the durability improvement rapidly diminishes. And you’re also getting backups in S3!

For durability type #2, you just need to ensure that you’re running a multi-AZ configuration—at least two replicas, and preferably three. Beyond three, you really aren’t gaining much in durability.

What of availability?

People probably want their database to be available too, right? Well, ideally you want to be able to continue to support those durable writes even if one replica (or AZ) is unavailable. So the magic number of replicas is, again, three. Three is probably also the number of AZs you are operating your application across if you want to deliver high availability for your customers. Is it a coincidence that AWS builds regions with a three-AZ minimum? I think not. It’s fair to say that three database replicas across three AZs is enough. Adding more replicas is just an expensive way to support more (eventually consistent) reads.

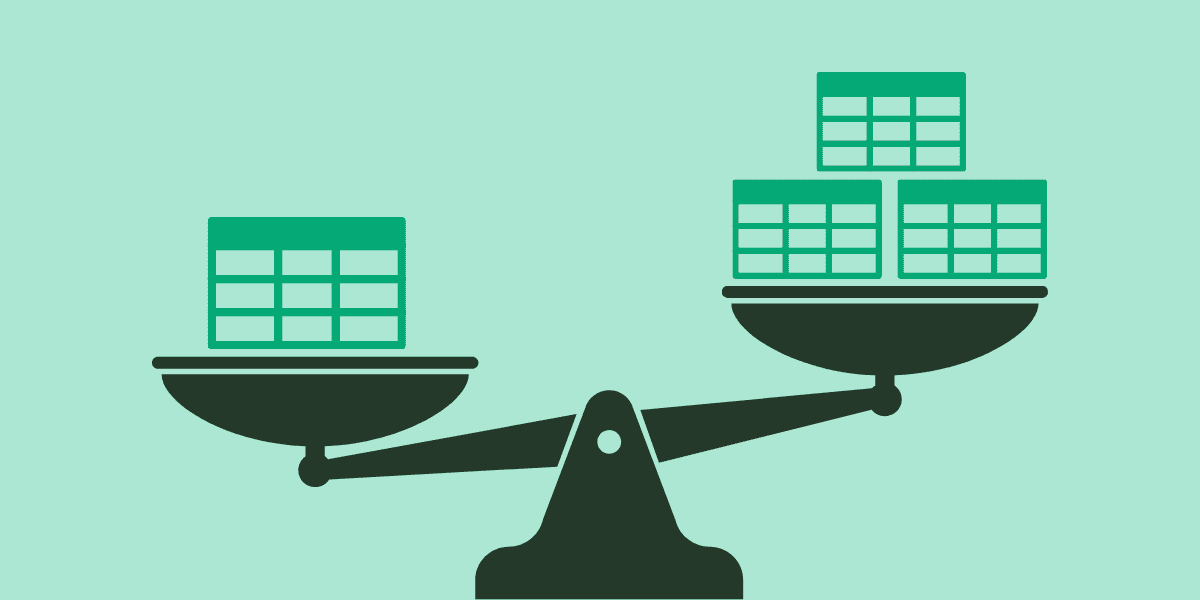

Why not scale out eventually consistent reads using a cache?

Great question! We’ve learned that beyond three database replicas, we’re really not gaining much in terms of durability or availability—and we’re only increasing our ability to serve eventually consistent reads. Caches are built for serving eventually consistent reads—the type you might send to the reader replica in Aurora or RDS. They also deliver improved performance, and they are terrific at absorbing load spikes to protect databases from overload (giving you more time to manage things like vertical scaling of replicas). While caching database reads is quite common, it’s not ubiquitous—and the reason is that adding caching used to be complex, and potentially costly at low scale (there’s a high minimum buy-in price for traditional cache clusters that makes them untenable for low throughput needs).
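To make the trade-off concrete, here is a back-of-the-envelope sketch in Python. The function name and the traffic numbers are illustrative assumptions, not measurements. It simply assumes that every cache hit is a read the database never sees.

```python
def database_reads_per_second(total_reads_per_second: float, cache_hit_rate: float) -> float:
    """Estimate the read load that still reaches the database.

    cache_hit_rate is the fraction of reads answered by the cache (0.0 to 1.0).
    Only the misses fall through to the database.
    """
    if not 0.0 <= cache_hit_rate <= 1.0:
        raise ValueError("cache_hit_rate must be between 0 and 1")
    return total_reads_per_second * (1.0 - cache_hit_rate)


# Hypothetical workload: 10,000 eventually consistent reads per second.
for hit_rate in (0.0, 0.5, 0.9):
    remaining = database_reads_per_second(10_000, hit_rate)
    print(f"hit rate {hit_rate:.0%}: {remaining:,.0f} reads/s reach the database")
```

Under these assumed numbers, a 90% hit rate leaves roughly 1,000 of the 10,000 reads per second for the reader replicas to serve, which is the kind of reduction that would otherwise take additional replicas.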

Momento has changed the equation with a truly serverless cache offering. You can now apply caching at any scale without taking on additional operational burden, and you’ll only pay for actual consumption. Today, it makes sense to build a side cache into your application from the beginning if there are queries which do not require strong read-after-write consistency. It will help you to scale out databases like Aurora and RDS smoothly and cost-effectively from the start. I encourage developers to look for simple integration opportunities: use frameworks with built-in caching support (such as Laravel for PHP), or use middleware to centrally wrap database queries.
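The side cache idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Momento's SDK: `TTLSideCache` is a hypothetical in-process stand-in for a remote cache, and `fetch_product_from_database` is a hypothetical placeholder for a query you would otherwise send to a reader replica.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class TTLSideCache:
    """A tiny in-process stand-in for a remote cache service (hypothetical)."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[Hashable, Tuple[float, Any]] = {}

    def get_or_load(self, key: Hashable, load: Callable[[], Any]) -> Any:
        """Return the cached value for key, calling load() on a miss or expiry."""
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None:
            expires_at, value = entry
            if now < expires_at:
                return value  # cache hit: the database is not touched
        value = load()  # cache miss or expired entry: fall through to the database
        self._entries[key] = (now + self._ttl, value)
        return value


# Counts how many times the placeholder "database" is actually queried.
stats = {"queries": 0}


def fetch_product_from_database(product_id: int) -> dict:
    """Hypothetical placeholder for a query against a reader replica."""
    stats["queries"] += 1
    return {"id": product_id, "name": f"product-{product_id}"}


cache = TTLSideCache(ttl_seconds=30)


def get_product(product_id: int) -> dict:
    """Central wrapper: every read for a product goes through the cache first."""
    return cache.get_or_load(
        ("product", product_id),
        lambda: fetch_product_from_database(product_id),
    )


first = get_product(42)   # miss: runs the query
second = get_product(42)  # hit: served from the cache
```

The TTL bounds how stale a cached row can be, so it plays a role similar to replication lag on a reader replica. In a real deployment the dictionary would be replaced by calls to your cache service's client, and only queries that do not need read-after-write consistency would go through the wrapper.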

Try Momento Cache today to learn how simple it can be to add the benefits of caching to Aurora and RDS. Your application users will appreciate the low latency experience and you’ll sleep better knowing you’ve optimized your Aurora or RDS cluster’s scaling path.